Summary

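I recently came across my notes from upgrading Proxmox VE 7.4 to 8.3, so I am posting them here.

The procedure basically follows the official upgrade guide.

https://pve.proxmox.com/wiki/Upgrade_from_7_to_8

I believe the actual work was done around the end of November 2024.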

Check Proxmox VE versions

pve-01

root@pve01:~# pveversion

pve-manager/7.4-19/f98bf8d4 (running kernel: 5.15.158-2-pve)

pve-02

root@pve02:~# pveversion

pve-manager/7.4-19/f98bf8d4 (running kernel: 5.15.158-2-pve)

pve-03

root@pve03:~# pveversion

pve-manager/7.4-19/f98bf8d4 (running kernel: 5.15.158-2-pve)

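Running the same command on every node by hand gets tedious, so here is a minimal sketch that loops over the nodes instead. It assumes passwordless root SSH between the nodes, which is my assumption and not something these notes confirm. The function name `check_pve_versions` is made up for illustration.

```shell
# Print the Proxmox VE version of every node given as an argument.
# Assumption: root can SSH to each node without a password prompt.
check_pve_versions() {
    for node in "$@"; do
        # pveversion prints one line, e.g. "pve-manager/7.4-19/... (running kernel: ...)"
        printf '%s: %s\n' "$node" "$(ssh "root@${node}" pveversion)"
    done
}

# Usage: check_pve_versions pve01 pve02 pve03
```

All nodes should report the same pve-manager version before the upgrade starts.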

Ceph

root@pve01:~# ceph versions

{

"mon": {

"ceph version 17.2.7 (29dffbfe59476a6bb5363cf5cc629089b25654e3) quincy (stable)": 3

},

"mgr": {

"ceph version 17.2.7 (29dffbfe59476a6bb5363cf5cc629089b25654e3) quincy (stable)": 3

},

"osd": {

"ceph version 17.2.7 (29dffbfe59476a6bb5363cf5cc629089b25654e3) quincy (stable)": 3

},

"mds": {

"ceph version 17.2.7 (29dffbfe59476a6bb5363cf5cc629089b25654e3) quincy (stable)": 3

},

"overall": {

"ceph version 17.2.7 (29dffbfe59476a6bb5363cf5cc629089b25654e3) quincy (stable)": 12

}

}

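What matters in the output above is that every daemon reports the same version string. As a rough sketch, that can be checked without reading the JSON by eye. The helper names below are hypothetical, and the check only uses `grep`, `sort`, and `wc`, so it does not depend on `jq` being installed.

```shell
# Count the distinct Ceph versions found in `ceph versions` JSON on stdin.
count_ceph_versions() {
    grep -o 'ceph version [0-9][0-9.]*' | sort -u | wc -l | tr -d ' '
}

# Succeed only if exactly one Ceph version is running cluster-wide.
ceph_single_version() {
    [ "$(count_ceph_versions)" -eq 1 ]
}

# Usage: ceph versions | ceph_single_version && echo "single Ceph version"
```

This is the same condition the pve7to8 script reports as "single running overall version detected".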

Continuously use the pve7to8 checklist script

root@pve01:~# pve7to8 --full

= CHECKING VERSION INFORMATION FOR PVE PACKAGES =

Checking for package updates..

PASS: all packages up-to-date

Checking proxmox-ve package version..

PASS: proxmox-ve package has version >= 7.4-1

Checking running kernel version..

PASS: running kernel '5.15.158-2-pve' is considered suitable for upgrade.

= CHECKING CLUSTER HEALTH/SETTINGS =

PASS: systemd unit 'pve-cluster.service' is in state 'active'

PASS: systemd unit 'corosync.service' is in state 'active'

PASS: Cluster Filesystem is quorate.

Analzying quorum settings and state..

INFO: configured votes - nodes: 3

INFO: configured votes - qdevice: 0

INFO: current expected votes: 3

INFO: current total votes: 3

Checking nodelist entries..

PASS: nodelist settings OK

Checking totem settings..

PASS: totem settings OK

INFO: run 'pvecm status' to get detailed cluster status..

= CHECKING HYPER-CONVERGED CEPH STATUS =

INFO: hyper-converged ceph setup detected!

INFO: getting Ceph status/health information..

WARN: Ceph health reported as 'HEALTH_WARN'.

Use the PVE dashboard or 'ceph -s' to determine the specific issues and try to resolve them.

INFO: checking local Ceph version..

PASS: found expected Ceph 17 Quincy release.

INFO: getting Ceph daemon versions..

PASS: single running version detected for daemon type monitor.

PASS: single running version detected for daemon type manager.

PASS: single running version detected for daemon type MDS.

PASS: single running version detected for daemon type OSD.

PASS: single running overall version detected for all Ceph daemon types.

WARN: 'noout' flag not set - recommended to prevent rebalancing during upgrades.

INFO: checking Ceph config..

= CHECKING CONFIGURED STORAGES =

PASS: storage 'cephfs' enabled and active.

PASS: storage 'local' enabled and active.

PASS: storage 'local-lvm' enabled and active.

PASS: storage 'rdb_ct' enabled and active.

PASS: storage 'rdb_vm' enabled and active.

PASS: storage 'www' enabled and active.

INFO: Checking storage content type configuration..

PASS: no storage content problems found

PASS: no storage re-uses a directory for multiple content types.

= MISCELLANEOUS CHECKS =

INFO: Checking common daemon services..

PASS: systemd unit 'pveproxy.service' is in state 'active'

PASS: systemd unit 'pvedaemon.service' is in state 'active'

PASS: systemd unit 'pvescheduler.service' is in state 'active'

PASS: systemd unit 'pvestatd.service' is in state 'active'

INFO: Checking for supported & active NTP service..

PASS: Detected active time synchronisation unit 'chrony.service'

INFO: Checking for running guests..

WARN: 6 running guest(s) detected - consider migrating or stopping them.

INFO: Checking if the local node's hostname 'pve01' is resolvable..

INFO: Checking if resolved IP is configured on local node..

PASS: Resolved node IP '192.168.122.26' configured and active on single interface.

INFO: Check node certificate's RSA key size

PASS: Certificate 'pve-root-ca.pem' passed Debian Busters (and newer) security level for TLS connections (4096 >= 2048)

PASS: Certificate 'pve-ssl.pem' passed Debian Busters (and newer) security level for TLS connections (2048 >= 2048)

PASS: Certificate 'pveproxy-ssl.pem' passed Debian Busters (and newer) security level for TLS connections (2048 >= 2048)

INFO: Checking backup retention settings..

PASS: no backup retention problems found.

INFO: checking CIFS credential location..

PASS: no CIFS credentials at outdated location found.

INFO: Checking permission system changes..

INFO: Checking custom role IDs for clashes with new 'PVE' namespace..

PASS: no custom roles defined, so no clash with 'PVE' role ID namespace enforced in Proxmox VE 8

INFO: Checking if LXCFS is running with FUSE3 library, if already upgraded..

SKIP: not yet upgraded, no need to check the FUSE library version LXCFS uses

INFO: Checking node and guest description/note length..

PASS: All node config descriptions fit in the new limit of 64 KiB

PASS: All guest config descriptions fit in the new limit of 8 KiB

INFO: Checking container configs for deprecated lxc.cgroup entries

PASS: No legacy 'lxc.cgroup' keys found.

INFO: Checking if the suite for the Debian security repository is correct..

PASS: found no suite mismatch

INFO: Checking for existence of NVIDIA vGPU Manager..

PASS: No NVIDIA vGPU Service found.

INFO: Checking bootloader configuration...

SKIP: System booted in legacy-mode - no need for additional packages

INFO: Check for dkms modules...

SKIP: could not get dkms status

= SUMMARY =

TOTAL: 45

PASSED: 39

SKIPPED: 3

WARNINGS: 3

FAILURES: 0

ATTENTION: Please check the output for detailed information!

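Since the checklist is meant to be run repeatedly, it helps to show only the lines that need attention. The following is a minimal sketch. It assumes the output is plain text when piped (I have not verified how the script handles colors in that case), and the function name is made up.

```shell
# Print only the WARN and FAIL lines from pve7to8 output read on stdin.
# Indented continuation lines that follow a warning are not included.
pve7to8_problems() {
    grep -E '^(WARN|FAIL): ' || true
}

# Usage: pve7to8 --full | pve7to8_problems
```

For the run above this would leave just the three WARN lines.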

Check warnings

In short, I judged that none of the warnings were fatal and carried on with the upgrade.

Notes on each warning follow.

WARN: Ceph health reported as 'HEALTH_WARN'.

Use the PVE dashboard or 'ceph -s' to determine the specific issues and try to resolve them.

This only appeared because the placement group counts happened to be suboptimal, so I ignored it.

root@pve01:~# ceph -s | head -4

cluster:

id: 379c9ad8-dd15-4bf1-b9fc-f5206a75fe3f

health: HEALTH_WARN

2 pools have too many placement groups

As for the Ceph cluster, no major changes are made to it during this work, so I assume the warning has no impact.

WARN: 'noout' flag not set - recommended to prevent rebalancing during upgrades.

Because I upgrade one node at a time and live-migrate the VMs to the other nodes beforehand, I am ignoring this for now.

WARN: 6 running guest(s) detected - consider migrating or stopping them.

Start upgrade

Update Debian Base Repositories to Bookworm

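The following files need to be modified.

The pve-no-subscription entry lives in /etc/apt/sources.list.d/pve-no-subscription.list because it is a leftover from when I added it manually.

Since it became possible to enable the No-Subscription repository from the web UI, I believe the entry is written to /etc/apt/sources.list instead.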

root@pve01:~# grep -r bullseye /etc/apt/sources.list*

/etc/apt/sources.list:deb http://deb.debian.org/debian bullseye main contrib

/etc/apt/sources.list:deb http://deb.debian.org/debian bullseye-updates main contrib

/etc/apt/sources.list:deb http://security.debian.org bullseye-security main contrib

/etc/apt/sources.list.d/pve-no-subscription.list:deb http://download.proxmox.com/debian/pve bullseye pve-no-subscription

/etc/apt/sources.list.d/ceph.list:deb http://download.proxmox.com/debian/ceph-quincy bullseye main

/etc/apt/sources.list.d/pve-enterprise.list:#deb https://enterprise.proxmox.com/debian/pve bullseye pve-enterprise

Replace them all in one go.

sed -i 's/bullseye/bookworm/g' /etc/apt/sources.list

sed -i 's/bullseye/bookworm/g' /etc/apt/sources.list.d/pve-enterprise.list

sed -i 's/bullseye/bookworm/g' /etc/apt/sources.list.d/pve-no-subscription.list

echo "deb http://download.proxmox.com/debian/ceph-quincy bookworm no-subscription" > /etc/apt/sources.list.d/ceph.list

Confirm that no repository is still pointing at bullseye.

root@pve01:~# grep -r bullseye /etc/apt/sources.list*

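The plain `grep` above also matches commented-out lines, which is harmless here because everything was rewritten. If some entries are deliberately left commented out, a variant that reports only active lines may be handier. This is a minimal sketch with a made-up function name.

```shell
# List active (uncommented) APT source lines that still mention a suite.
# $1 = suite name (e.g. bullseye); remaining args = files or directories.
find_active_suite() {
    suite="$1"
    shift
    # -r: recurse into directories, -H: always print the file name.
    # The second grep drops lines whose content starts with '#'.
    grep -rH -- "$suite" "$@" | grep -v '^[^:]*:[[:space:]]*#' || true
}

# Usage: find_active_suite bullseye /etc/apt/sources.list /etc/apt/sources.list.d
```

Empty output means no active repository still points at the old suite.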

VM live migration to non-maintenance node

Temporarily move the running VMs off the node being upgraded and onto the other nodes. In my case this just means running a homemade script.

root@pve03:~# ./maintenance_migration.sh

Generated maintenance files:

- maintenance_migration_to.sh

- maintenance_migration_return.sh

Migration plan (maintenance_migration_to.sh):

qm migrate 3016 "pve01" --online

qm migrate 1074 "pve02" --online

qm migrate 2053 "pve01" --online

qm migrate 2043 "pve02" --online

qm migrate 1049 "pve01" --online

qm migrate 1036 "pve02" --online

qm migrate 1005 "pve01" --online

Do you wish to start migration ? [y/n]y

+ qm migrate 3016 pve01 --online

...

root@pve03:~# qm list | grep run

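The homemade script itself is not included in these notes. Going only by the plan it printed, the core idea can be sketched as follows: take the running VMs from `qm list` and spread them round-robin over the other nodes. This is a hypothetical reconstruction and not the actual `maintenance_migration.sh`. The function name is made up, and the sketch assumes the status is the third column of `qm list`.

```shell
# Read `qm list` output on stdin and print one `qm migrate` command per
# running VM, assigning target nodes (the arguments) round-robin.
plan_migration() {
    if [ "$#" -eq 0 ]; then
        echo "usage: qm list | plan_migration NODE..." >&2
        return 1
    fi
    awk -v targets="$*" '
        BEGIN { n = split(targets, node, " ") }
        # Column 3 is the status; this skips the header and stopped VMs.
        $3 == "running" {
            printf "qm migrate %s \"%s\" --online\n", $1, node[(i++ % n) + 1]
        }
    '
}

# Usage: qm list | plan_migration pve01 pve02
```

The printed commands can be reviewed first and then piped to `sh` once the plan looks right.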

Upgrade the system to Debian Bookworm and Proxmox VE 8

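After confirming with qm list | grep running that no running VMs remain on the node, proceed to the upgrade.

Downloading the packages in advance, in parallel with the other preparation, keeps the downtime short.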

apt update && apt dist-upgrade --download-only -y

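Next comes the actual system upgrade, but an SSH session or the Proxmox VE web terminal may be disconnected while it runs.

The servers I use are TX1320 M2 machines equipped with iRMC, so console redirection over IPMI is available.

This time I run the package upgrade over SOL (Serial over LAN) via ipmitool.

First, temporarily enable a getty on the serial port. (Leaving it enabled permanently would also be fine.)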

root@pve01:~# sudo systemctl start serial-getty@ttyS0.service

root@pve01:~# sudo systemctl status serial-getty@ttyS0.service

● serial-getty@ttyS0.service - Serial Getty on ttyS0

Loaded: loaded (/lib/systemd/system/serial-getty@.service; disabled; vendor preset: enabled)

Active: active (running) since Fri 2024-11-29 00:06:18 JST; 1s ago

Docs: man:agetty(8)

man:systemd-getty-generator(8)

http://0pointer.de/blog/projects/serial-console.html

Main PID: 2215434 (agetty)

Tasks: 1 (limit: 76985)

Memory: 208.0K

CPU: 3ms

CGroup: /system.slice/system-serial\x2dgetty.slice/serial-getty@ttyS0.service

└─2215434 /sbin/agetty -o -p -- \u --keep-baud 115200,57600,38400,9600 ttyS0 vt220

Nov 29 00:06:18 pve01 systemd[1]: Started Serial Getty on ttyS0.

After that, you can connect from any terminal with something like ipmitool -I lanplus -H ${PVE_IP} -U ${IPMIUSER} -P ${IPMIPASS} sol activate.
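Passing the password with -P leaves it in the shell history and the process list. As a small sketch of an alternative, ipmitool's -E option reads the password from the IPMI_PASSWORD environment variable instead. The wrapper name `sol_connect` and its argument order are my own invention.

```shell
# Open a Serial over LAN session to a BMC.
# $1 = BMC address, $2 = IPMI user name.
# The password is taken from the IPMI_PASSWORD environment variable (-E).
sol_connect() {
    ipmitool -I lanplus -H "$1" -U "$2" -E sol activate
}

# Usage: IPMI_PASSWORD='...' sol_connect "${PVE_IP}" "${IPMIUSER}"
```

The session can be ended with the `~.` escape sequence, or from another terminal with `sol deactivate`.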

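Log in as root and upgrade the system.

Along the way I was asked whether to replace some modified configuration files, and answered as follows.

/etc/issue: keep the current version (N)

/etc/lvm/lvm.conf: overwrite with the package version (Y)

/etc/apt/sources.list.d/pve-enterprise.list: not used, so keep the current version (N)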

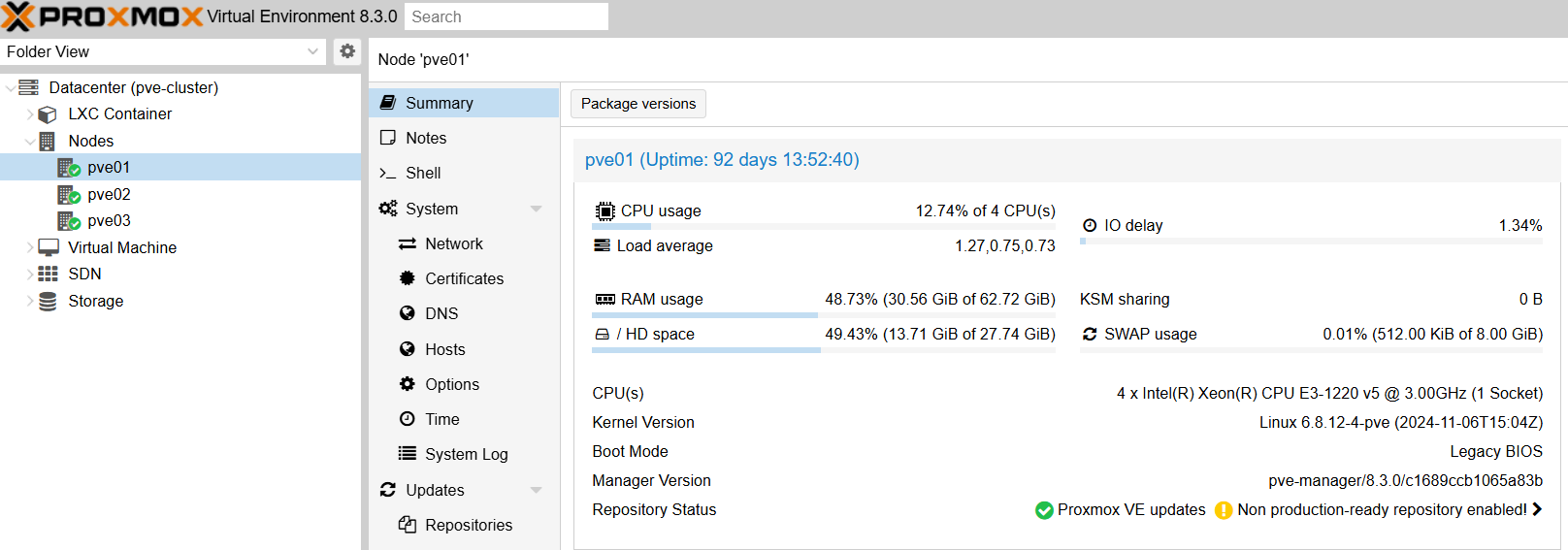

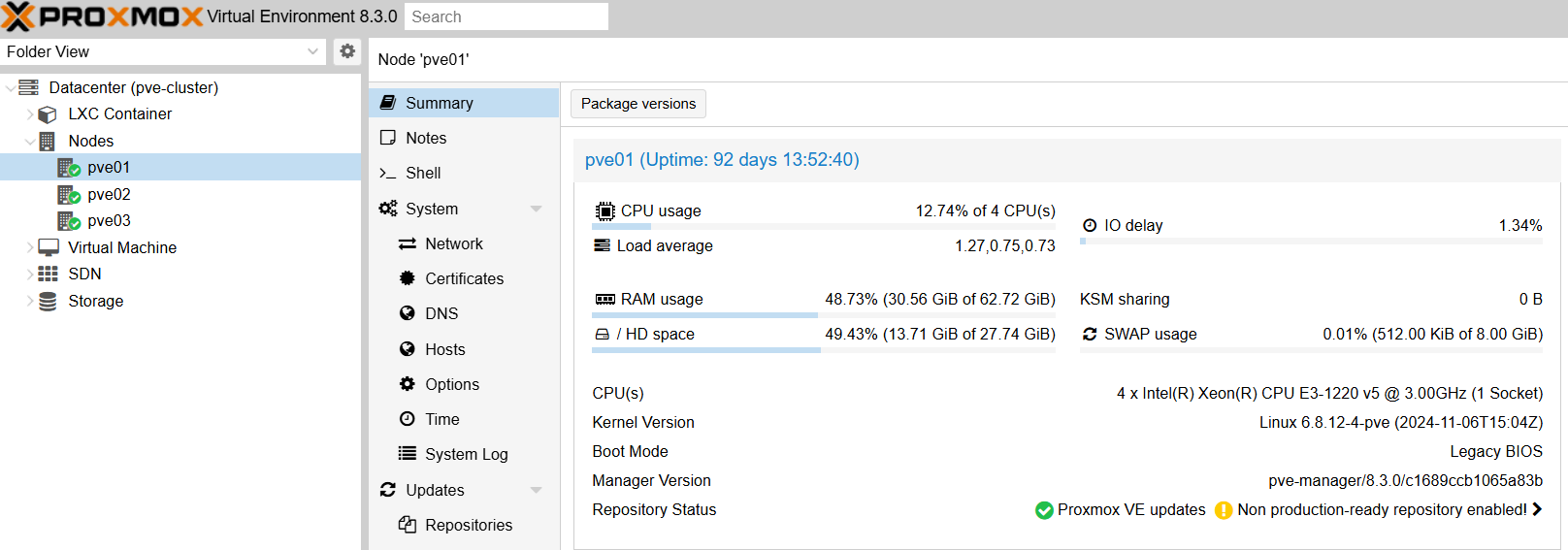

After rebooting, confirm that the version and the Ceph status look fine.

root@pve01:~# pveversion

pve-manager/8.3.0/c1689ccb1065a83b (running kernel: 6.8.12-4-pve)

root@pve01:~# ceph -s

cluster:

id: 379c9ad8-dd15-4bf1-b9fc-f5206a75fe3f

health: HEALTH_WARN

2 pools have too many placement groups

services:

mon: 3 daemons, quorum pve01,pve02,pve03 (age 3M)

mgr: pve01(active, since 3M), standbys: pve03, pve02

mds: 1/1 daemons up, 2 standby

osd: 3 osds: 3 up (since 3M), 3 in (since 2y)

data:

volumes: 1/1 healthy

pools: 4 pools, 129 pgs

objects: 259.51k objects, 976 GiB

usage: 2.3 TiB used, 3.2 TiB / 5.5 TiB avail

pgs: 129 active+clean

io:

client: 284 KiB/s rd, 1.1 MiB/s wr, 6 op/s rd, 54 op/s wr

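When repeating this on the remaining nodes, the version check can be turned into a quick pass/fail test. The following is a minimal sketch with made-up helper names. It only parses the `pve-manager/<version>/...` line shown above.

```shell
# Extract the major version from pveversion output on stdin,
# e.g. "pve-manager/8.3.0/c1689ccb1065a83b (...)" -> 8
pve_major() {
    sed -n 's|^pve-manager/\([0-9][0-9]*\)\..*|\1|p'
}

# Succeed only if the reported major version is 8 or newer.
pve_is_8_or_newer() {
    major="$(pve_major)"
    [ "${major:-0}" -ge 8 ]
}

# Usage: pveversion | pve_is_8_or_newer && echo "upgraded"
```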

Repeat this for the remaining nodes and the upgrade is done.

Well, good enough, I would say.